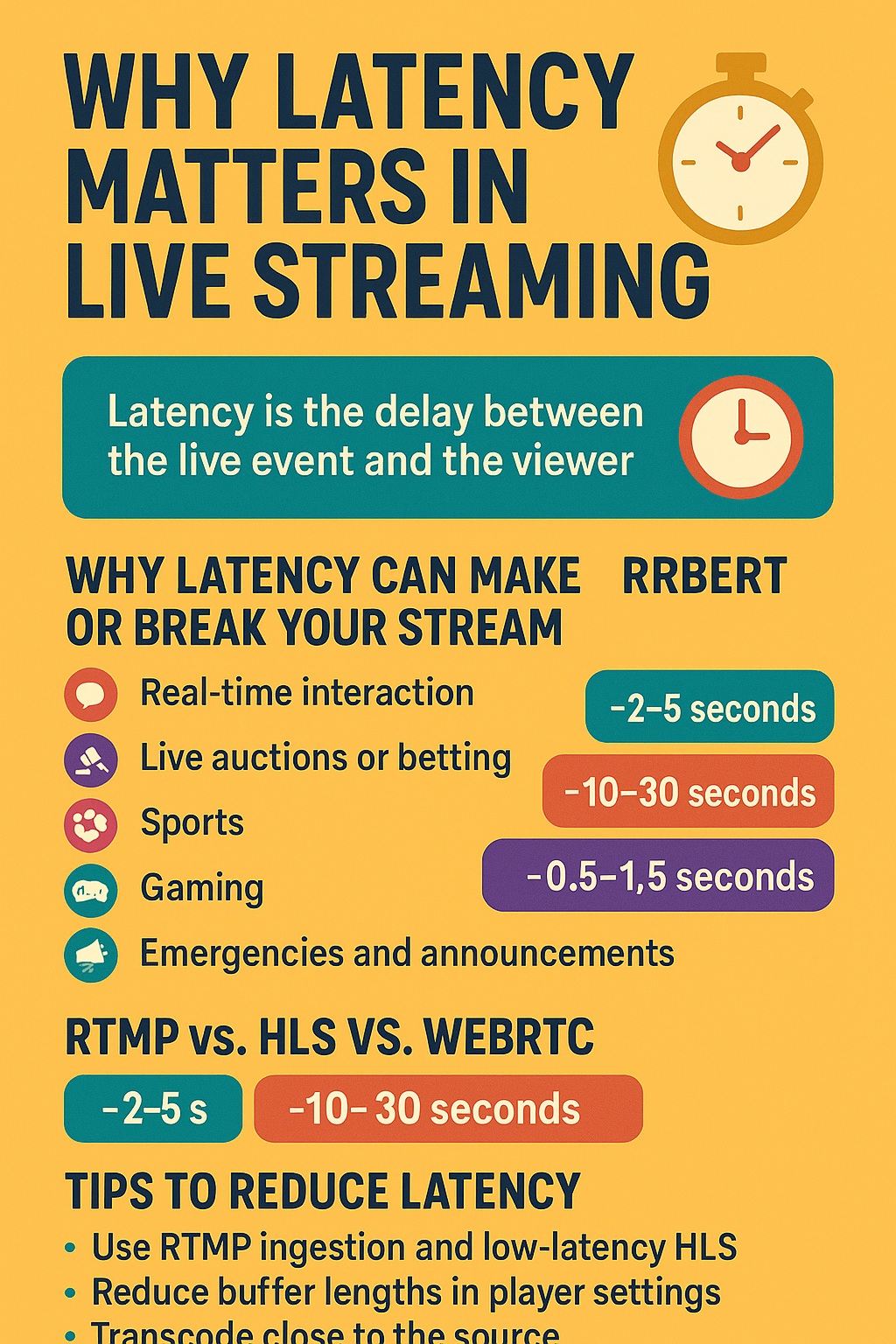

In live streaming, latency is one of the most overlooked yet critical elements. To the average viewer, a few seconds may not seem like much, but in practice, those seconds can mean the difference between a smooth experience and a disaster.

If you’ve ever shouted “Goal!” before your friend even saw it on their screen, you’ve experienced the reality of streaming latency.

Let’s dive into what latency is, why it matters, and how different technologies affect it.

What is latency in live streaming?

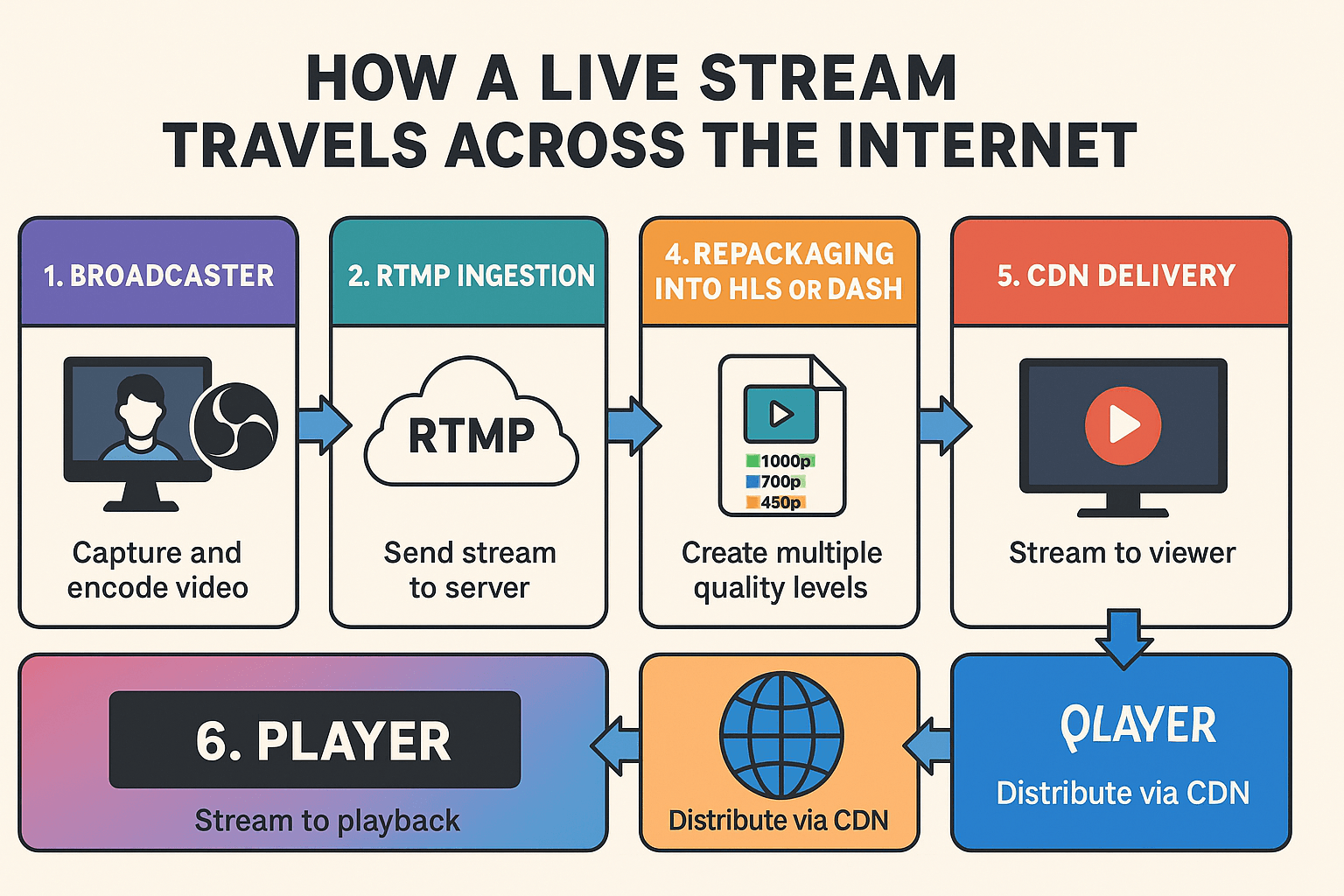

Latency is the delay between the moment something happens in real life and the moment the viewer sees it on their screen. In streaming, latency is introduced at several points:

- Encoding (compressing your video)

- Transmission (sending the stream to the server)

- Transcoding and repackaging (for different formats and bitrates)

- CDN caching and delivery

- Playback buffering

Together, these steps add up. A typical HLS-based live stream runs 10 to 30 seconds behind the action.
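
To make the "adding up" concrete, here is a minimal sketch that sums a latency budget across the stages listed above. The per-stage numbers are illustrative assumptions, not measurements from any real pipeline, and the names `STAGE_DELAYS_S`, `total_latency`, and `largest_contributor` are hypothetical.

```python
# Hypothetical per-stage delays in seconds.
# These are illustrative assumptions, not measured values.
STAGE_DELAYS_S = {
    "encoding": 1.0,
    "transmission": 0.5,
    "transcoding_and_repackaging": 4.0,
    "cdn_caching_and_delivery": 2.5,
    "playback_buffering": 12.0,
}


def total_latency(stage_delays: dict[str, float]) -> float:
    """Return end-to-end latency as the sum of all per-stage delays."""
    return sum(stage_delays.values())


def largest_contributor(stage_delays: dict[str, float]) -> str:
    """Return the name of the stage that adds the most delay."""
    return max(stage_delays, key=stage_delays.get)


print(f"Total: {total_latency(STAGE_DELAYS_S):.1f} s")
print(f"Biggest stage: {largest_contributor(STAGE_DELAYS_S)}")
```

With these assumed numbers, playback buffering dominates the budget, which is why player settings are often the first place to look when trimming delay.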

Why latency can make or break your stream

Here’s why latency is more than a technical detail:

- Real-time interaction: If you’re taking questions, reading chat, or hosting a quiz, latency breaks the flow. A viewer’s comment might arrive after you’ve moved on.

- Live auctions or betting: Delays give some viewers an unfair advantage, potentially even causing legal or financial issues.

- Sports: Nobody wants to hear a neighbor celebrate a goal before they see it.

- Gaming: For esports or Twitch streams, low latency creates more immersive and responsive experiences.

- Emergencies and announcements: In sensitive contexts like weather alerts or political events, delays can cause confusion or misinformation.

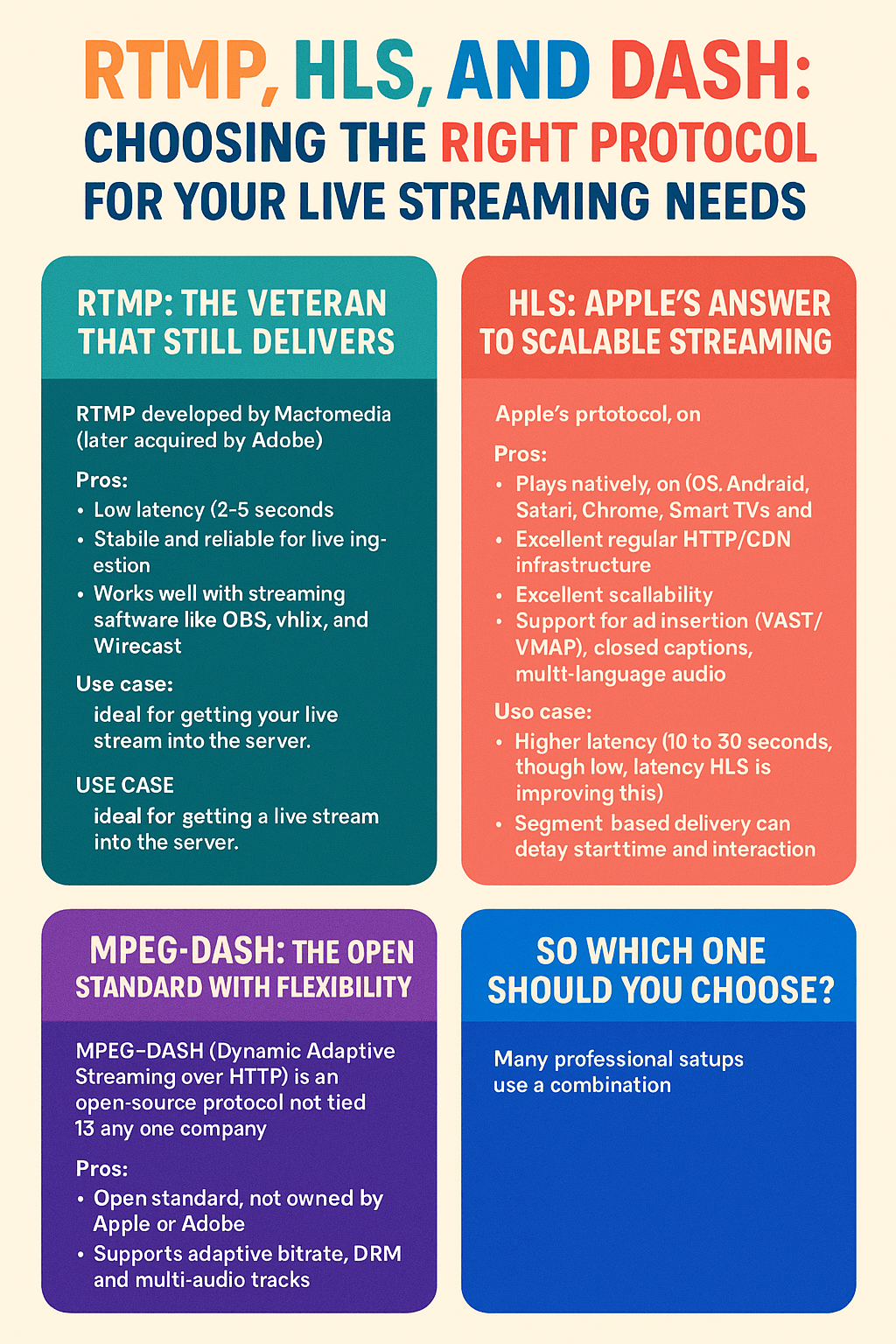

RTMP vs. HLS vs. WebRTC: the latency battle

Let’s compare the major protocols by latency:

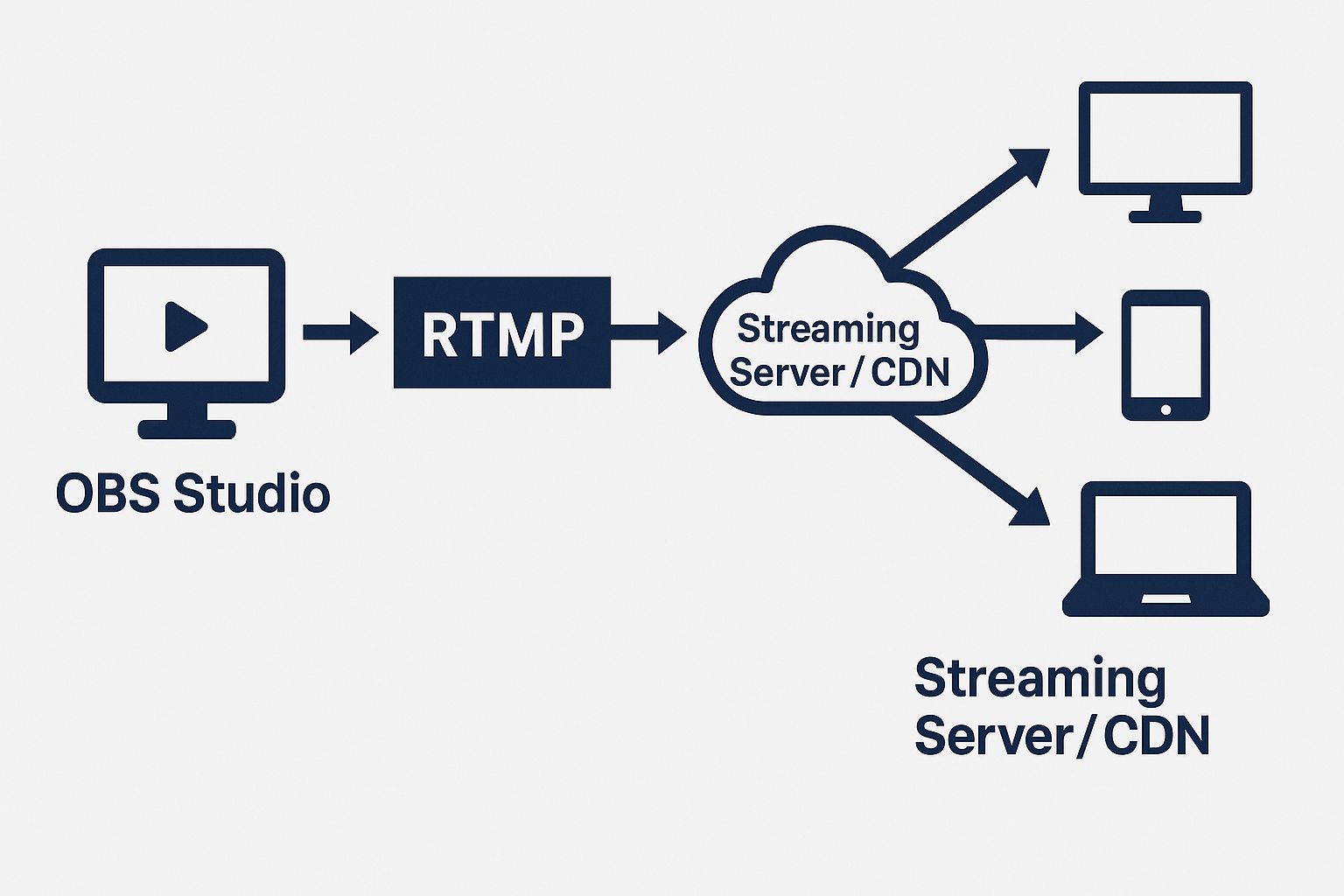

- RTMP: ~2 to 5 seconds. Well suited to ingest (sending the stream to the server), but rarely used for playback now that browsers no longer support Flash.

- HLS: ~10 to 30 seconds. Most widely supported format, but has the highest latency.

- Low-Latency HLS (LL-HLS): ~3 to 7 seconds. A newer extension of HLS that delivers partial segments and uses blocking playlist reloads, so players can hold a much smaller buffer.

- DASH: Similar to HLS in latency, unless configured with low-latency extensions.

- WebRTC: ~0.5 to 1.5 seconds. The best option for ultra-low latency, but harder to scale to large audiences.

There’s no perfect solution. It depends on your goals.
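
As a rough illustration of "it depends on your goals", the sketch below filters the protocols above by a target latency. The ranges are copied from the list in this article. DASH is left out because no numeric range is given for it. The names `Protocol`, `PROTOCOLS`, and `protocols_within` are hypothetical, and a real decision would also weigh scale, device support, and cost.

```python
from typing import NamedTuple


class Protocol(NamedTuple):
    name: str
    min_latency_s: float
    max_latency_s: float


# Approximate latency ranges as quoted in this article.
PROTOCOLS = [
    Protocol("WebRTC", 0.5, 1.5),
    Protocol("RTMP", 2.0, 5.0),
    Protocol("LL-HLS", 3.0, 7.0),
    Protocol("HLS", 10.0, 30.0),
]


def protocols_within(target_s: float) -> list[str]:
    """Return protocols whose worst-case latency fits within the target."""
    return [p.name for p in PROTOCOLS if p.max_latency_s <= target_s]


# Example: which options stay under a 7-second budget?
print(protocols_within(7.0))
```

Filtering on the upper bound is a deliberately conservative choice: it only keeps protocols that meet the target even at the slow end of their range.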

Can you really stream with zero delay?

Zero latency? No. Even traditional TV has 2–5 seconds of delay. But with WebRTC and low-latency HLS, you can get impressively close.

The trade-offs often come down to:

- Scalability

- Device compatibility

- Cost of infrastructure

- Interactivity requirements

A global concert stream for 100,000 viewers doesn’t need 1-second latency. But a live auction with a handful of bidders does.

Tips to reduce latency in your setup

If you want to minimize latency without breaking your setup, here are a few suggestions:

- Use RTMP ingestion and low-latency HLS for playback

- Reduce buffer lengths in your player settings

- Transcode close to the source (edge computing)

- Avoid unnecessary CDN hops

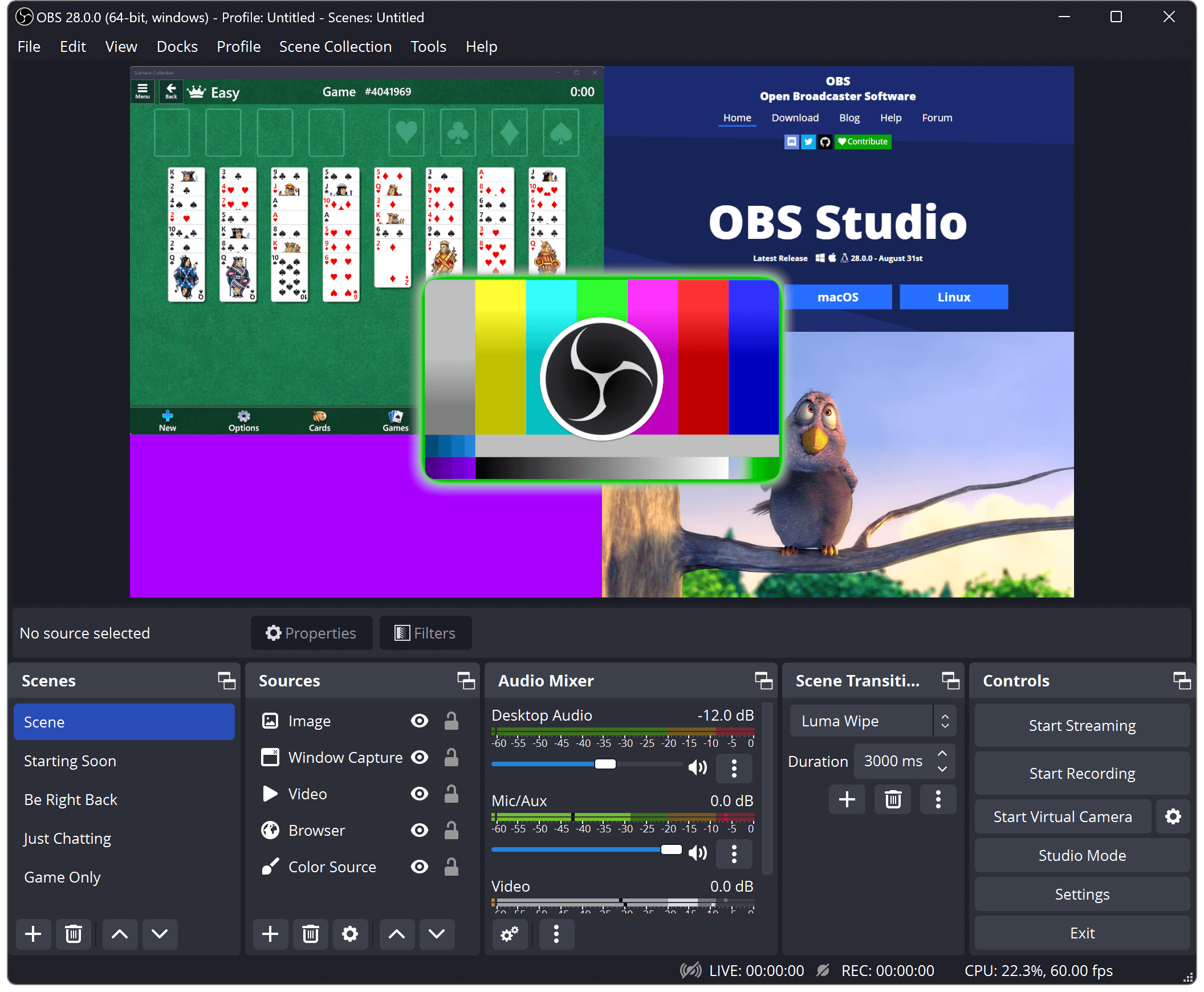

- Use a dedicated encoder with tuned latency settings (like OBS or vMix)
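
To see why the buffer-length tip in the list above matters, here is a back-of-the-envelope sketch. It assumes a common rule of thumb for segmented protocols such as HLS: the player's buffering delay is roughly the segment duration multiplied by the number of segments it holds before starting playback. The function name `buffer_latency_s` and the example values are hypothetical.

```python
def buffer_latency_s(segment_duration_s: float, segments_buffered: int) -> float:
    """Estimate player buffering delay as segment duration x segments held.

    This is a rule-of-thumb approximation, not a model of any specific player.
    """
    if segment_duration_s <= 0:
        raise ValueError("segment_duration_s must be positive")
    if segments_buffered < 1:
        raise ValueError("segments_buffered must be at least 1")
    return segment_duration_s * segments_buffered


# Assumed example: three buffered segments at two different segment lengths.
print(buffer_latency_s(6.0, 3))  # longer segments
print(buffer_latency_s(2.0, 3))  # shorter segments
```

Under this approximation, shortening segments or holding fewer of them cuts the delay proportionally, at the cost of less protection against network hiccups.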

Also, monitor your latency in real time and adjust your player’s behavior accordingly.
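
One possible shape for that monitoring loop is sketched below. It assumes the player can read a capture timestamp for the frame currently on screen (in HLS, the `EXT-X-PROGRAM-DATE-TIME` tag can carry this), and it nudges the playback rate upward when the viewer drifts too far behind. The function names, the 0.5-second tolerance, the 0.05 gain, and the 1.1x cap are all illustrative assumptions, not settings from any particular player.

```python
from datetime import datetime, timedelta, timezone


def measure_latency_s(now: datetime, frame_captured_at: datetime) -> float:
    """Latency is wall-clock time minus the capture time of the shown frame."""
    return (now - frame_captured_at).total_seconds()


def catch_up_rate(
    latency_s: float,
    target_s: float,
    tolerance_s: float = 0.5,  # assumed dead zone to avoid constant adjustments
    max_rate: float = 1.1,     # assumed cap so the speed-up stays unobtrusive
) -> float:
    """Return a playback rate that moves the player toward the target latency."""
    drift = latency_s - target_s
    if drift <= tolerance_s:
        return 1.0  # close enough (or ahead of target): play at normal speed
    # Speed up in proportion to the drift, but never beyond the cap.
    return min(max_rate, 1.0 + 0.05 * drift)


# Example with a fixed clock: the frame on screen was captured 10 s ago.
now = datetime(2025, 1, 1, 12, 0, 10, tzinfo=timezone.utc)
captured = now - timedelta(seconds=10)
latency = measure_latency_s(now, captured)
print(latency, catch_up_rate(latency, target_s=3.0))
```

Gently speeding up playback is only one way to recover. A player could instead jump forward to the live edge, which is faster but more noticeable to the viewer.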

Final thoughts

Latency isn’t just a number—it’s a viewer experience. Too much delay kills interactivity, spoils surprise, and frustrates users. While some delay is unavoidable, optimizing for lower latency gives you a real competitive edge.

In 2025, the tools are better than ever. Whether you use RTMP + HLS or WebRTC for critical applications, understanding latency puts you in control of your stream’s quality—and your audience’s satisfaction.